Incentives: The Failure & The Fix

Welcome to the 716 new members of the curiosity tribe who have joined us since Friday. Join the 32,004 others who are receiving high-signal, curiosity-inducing content every single week. Share this on Twitter to help grow the tribe!

Today’s newsletter is brought to you by Tegus!

Tegus has been a complete game changer for my research and learning process. Tegus is the leading platform for primary research—it offers a searchable database of thousands of instantly-available, investor-led interviews with experts on a wide range of industries, companies, and topics. It’s fast and cost-effective, enabling you to do great primary research without breaking the bank.

Special Offer: Tegus is offering a free 2-week trial to all Curiosity Chronicle subscribers—sign up below to level up your research game today!

Today at a Glance:

Incentives are everything—an uber-powerful force governing our interactions, organizations, and society.

Unfortunately, humans are astonishingly bad at establishing incentives—we consistently create systems that invite manipulation and unintended consequences.

The framework for better incentives involves six key pillars: Objectives, Metrics, Anti-Metrics, Stakes & Effects, Skin in the Game, and Clarity & Fluidity.

Incentives: The Failure & The Fix

“Show me the incentive and I will show you the outcome.” — Charlie Munger

Incentives are everything—an uber-powerful force governing our interactions, organizations, and society.

Well-designed incentives have the power to create great outcomes; poorly-designed incentives have the power to…well…create terrible outcomes.

Unfortunately, humans are astonishingly bad at establishing incentives—we consistently create systems that invite manipulation and unintended consequences. More often than not, we wind up in the poorly-designed camp scrambling for answers and quick fixes.

Let’s change that…

In today’s piece, I will share a framework for establishing incentives (that actually create desired outcomes).

Incentives: The Failure

Let’s start with a basic definition of incentives:

Incentives are anything that motivates, inspires, or drives an individual to act in a specific manner. They come in two forms: intrinsic and extrinsic.

Intrinsic incentives are internal—created by self-interest or desire. Extrinsic incentives are external—created by outside factors, typically a reward (positive incentive) or punishment (negative incentive).

For today, we'll be focusing on extrinsic incentives…

In a (very) simple model, extrinsic incentives involve two key components:

Measure: The metric that the individual or group will be judged upon. The measure can be quantitative (KPIs, metrics, etc.) or qualitative.

Target: The level of the measure at which a reward or punishment will be initiated. The target can be specific (you receive your incentive if the KPI hits X level) or general (you receive your incentive if your manager is satisfied with your work).
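The two components above can be sketched in a few lines of Python. This is a minimal illustration rather than anything from a real system: the `Incentive` class, the `is_earned` method, and the sample account-opening numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict


# Hypothetical names throughout: an illustrative sketch, not a real system.
@dataclass
class Incentive:
    name: str
    measure: Callable[[Dict[str, float]], float]  # how performance is judged
    target: float  # level of the measure at which the reward is triggered

    def is_earned(self, results: Dict[str, float]) -> bool:
        """Return True when the measured value reaches the target."""
        return self.measure(results) >= self.target


# A specific, quantitative incentive: open at least 20 new accounts.
accounts_incentive = Incentive(
    name="new accounts",
    measure=lambda r: r["accounts_opened"],
    target=20,
)

# Made-up results for two employees.
honest = {"accounts_opened": 12, "accounts_customers_wanted": 12}
gamed = {"accounts_opened": 25, "accounts_customers_wanted": 10}

print(accounts_incentive.is_earned(honest))  # False
print(accounts_incentive.is_earned(gamed))   # True
```

Note that the incentive only ever sees the measure. In this sketch, the employee who opened accounts nobody asked for is the one who gets rewarded.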

But there is a real problem here. This simple model of incentives—which will feel familiar if you have ever worked in the government, a large organization, or anywhere really—often leads to undesirable outcomes and unintended consequences.

Goodhart’s Law

Goodhart’s Law is quite simple: When a measure becomes a target, it ceases to be a good measure. If a measure of performance becomes a stated goal, humans tend to optimize for it, regardless of any associated consequences. The measure loses its value as a measure!

Goodhart’s Law is named after British economist Charles Goodhart, who referenced the concept in a 1975 article on British monetary policy.

“Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.” — Charles Goodhart

But the concept was popularized by anthropologist Marilyn Strathern. In a 1997 paper, she generalized the thinking and called it Goodhart’s Law.

“When a measure becomes a target, it ceases to be a good measure.” — Marilyn Strathern

It became a mental model with considerable practical relevance—a phenomenon that has been observed time and again throughout history.

Let's look at a few examples and use them to build a mental model for where incentives go awry.

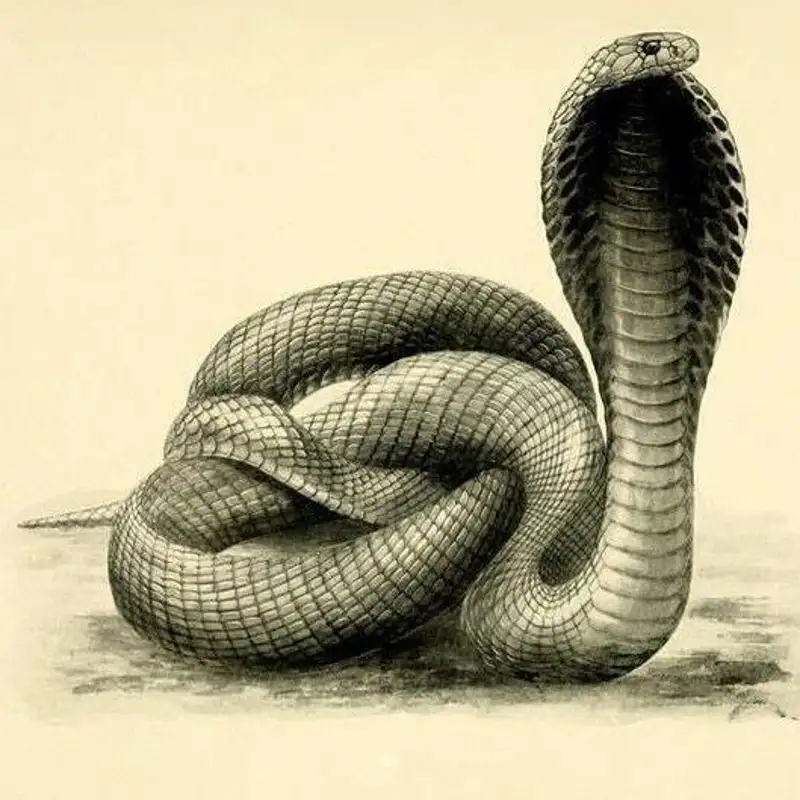

The Cobra Effect

There were too many cobras in India. The British colonists—worried about the impact of these deadly creatures—started offering bounties for cobra heads.

Locals excitedly began breeding cobras, chopping off their heads, and turning them in to earn the bounties. When the breeding got out of hand, some of the breeders were forced to release the cobras onto the streets, thereby increasing the population of cobras.

Clearly not what the British had in mind…

The British viewed cobra heads as a simple way to measure cobra elimination, so they gave the population an incentive to deliver cobra heads. The result? Locals gamed the system, breeding cobras to earn the bounties.

An incentive designed to reduce the cobra population actually increased it!

Soviet Nails

In order to meet their ambitious goals, the Soviets needed to produce more nails to fuel their industrial complex.

First, Soviet factories established incentives based on the number of nails produced. What happened? The workers produced thousands of tiny nails.

Nevertheless, they persisted, adjusting the incentives to be based on the weight of nails produced. That should fix the tiny nails problem! What happened? The workers produced a few massive nails.

In both cases, the nails were useless.

The Soviet factory managers had viewed nail quantity and nail weight as easy ways to measure production, so they gave their workers incentives based on these measures. The result? A bunch of useless nails.

Amazon's "Hire-to-Fire" Issue

Amazon believed employee turnover was healthy. From its early days, the company had built a culture in which the bottom 10% would be cut annually to keep upgrading the talent level of the organization.

To incentivize healthy employee turnover rates, it gave its managers a target rate for annual turnover.

The result? Media articles about a “hire-to-fire” practice emerged. Managers had allegedly hired employees they planned to fire in order to meet their turnover targets.

Clearly not what Jeff Bezos had in mind...

Wells Fargo Account Openings

Wells Fargo is a new inductee of the Unintended Consequences Hall of Fame. An instant classic.

Senior leadership of the bank viewed new account openings as an easy way to track business growth, so it gave its junior employees account-opening targets. Employees were pushed to hit these targets or risk punishment.

The result? Employees opened millions of fake accounts to hit their targets and Wells Fargo was fined billions for the fraud.

A Mental Model for Broken Incentives

With these examples as a backdrop, we can begin to formulate a simple, rough mental model for where incentives seem to go awry.

Poorly-designed incentives typically exhibit one or more of the following three characteristics:

McNamara Fallacy

Narrow Focus

Vanity > Quality

The McNamara Fallacy

The McNamara Fallacy is named after Robert McNamara—the US Secretary of Defense from 1961-1968—whose over-reliance on quantitative metrics led the US astray during the Vietnam War.

The McNamara Fallacy is the flawed assumption that what can't be measured isn't important. It is the tendency to make a decision based on observable, quantitative metrics while ignoring all others.

It leads to a focus on measuring what is easy to measure vs. what is actually important. Cobra heads, nail quantity or weight, employee turnover, and new account openings were all easy to measure quantitatively, but totally missed the bigger picture. All four programs were victims of the McNamara Fallacy.

(Note: I plan to do a full thread and newsletter piece on the McNamara Fallacy in the future, as its history is worthy of deeper discussion.)

Narrow Focus

The narrow focus issue is an objective scoping issue. If you think too narrowly about the desired outcomes of the program, you are more likely to create incentives that miss the forest for the trees.

In the Wells Fargo example, the desired outcome was never simply to have more accounts opened at the bank. A more appropriate objective would have been growth in the number of happy, well-served customers.

As a rule: When in doubt, zoom out.

Vanity > Quality

Vanity metrics—we’ve all seen them (and probably cared too much about them).

The reliance on vanity metrics—like cobra heads or new account openings—that will impress superiors or the public is a recipe for disaster in incentive design.

Imagine incentivizing a brand social media manager on the number of followers of the account. That person is likely to start buying followers in order to hit these targets. The vanity metric is rarely the quality metric.

So with these in mind, let's craft a better framework for incentives.

Incentives: The Fix

The incentive framework involves six key pillars:

Objectives

Metrics

Anti-Metrics

Stakes & Effects

Skin in the Game

Clarity & Fluidity

It is intended to provide a structure through which to create, evaluate, and adjust incentives. Let's walk through each of the pillars…

Objectives

Deep consideration of the ultimate objectives of the incentives is critical.

What does success look like? This isn’t about the surface level objectives—you need to go deeper.

Without upfront deep thought on objectives, intelligent incentive design is impossible. Start here before moving on.

Metrics

Establish metrics that you will measure to track success.

Importantly, be sure to avoid the McNamara Fallacy: never choose metrics on the basis of what is easily measurable over what is meaningful. Just because a specific KPI is easy to track doesn’t mean it is the right KPI to use as a measure.

If you could track and measure one metric that would tell you everything you want to know about your business or organization, what would it be?

Identify a wish list of metrics with no regard for feasibility. Work backwards from there into what is possible.

Anti-Metrics

Even more important than the core metrics, establish "anti-metrics" that you measure to track unintended consequences. I was first tipped off to this idea by Julie Zhuo (she calls them counter-metrics), who has done some exceptional thinking and writing on the topics of organizations and growth.

Anti-metrics force you to consider whether your incentives are fixing one problem but creating another.

In the Amazon example, an effective anti-metric may have been average tenure of newly-hired employees by cohort. If you saw this figure dipping dramatically from the start of the employee turnover incentive program, you would know something was wrong.
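As a rough sketch of how such an anti-metric could be tracked, the Python below groups tenure by hire-year cohort and flags any cohort whose average falls sharply against the one before it. The records, the function names `average_tenure_by_cohort` and `flag_drops`, and the 50% drop threshold are all assumptions made for illustration; they are not Amazon data or an established method.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (hire_year, tenure_in_months) for employees who have left.
departures = [
    (2018, 30), (2018, 26), (2018, 34),
    (2019, 28), (2019, 31),
    (2020, 9), (2020, 7), (2020, 11),  # hired after the turnover target began
]


def average_tenure_by_cohort(records):
    """Group tenures by hire year and return the mean tenure per cohort."""
    by_cohort = defaultdict(list)
    for hire_year, tenure_months in records:
        by_cohort[hire_year].append(tenure_months)
    return {year: mean(tenures) for year, tenures in sorted(by_cohort.items())}


def flag_drops(avg_by_cohort, max_drop=0.5):
    """Return cohorts whose average tenure fell by more than max_drop
    (as a fraction) compared with the previous cohort."""
    years = sorted(avg_by_cohort)
    flagged = []
    for prev, curr in zip(years, years[1:]):
        if avg_by_cohort[curr] < avg_by_cohort[prev] * (1 - max_drop):
            flagged.append(curr)
    return flagged


averages = average_tenure_by_cohort(departures)
print(averages)              # average tenure in months, keyed by hire year
print(flag_drops(averages))  # [2020]
```

The threshold is a judgment call. The point is only that the anti-metric is watched alongside the turnover target, so a sudden drop prompts a closer look at how the target is being met.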

Stakes & Effects

As with all decisions, it is critical to consider and understand the stakes:

High-Stakes = Costly Failure, Difficult to Reverse

Low-Stakes = Cheap Failure, Easy to Reverse

If you are dealing with a high-stakes program, you have to conduct a rigorous second-order effects analysis (pro tip: read my thread on this).

Iterate on your metrics and anti-metrics accordingly.

Skin in the Game

To avoid principal-agent problems, the incentive designer should have skin in the game.

Never allow an incentive to be implemented where the creator participates in the pleasure of the upside, but not the pain of the downside.

Skin in the game improves outcomes.

Clarity & Fluidity

An incentive is only as effective as:

The clarity of its dissemination.

The ability and willingness to adjust it based on new information.

Takeaway: Create a level playing field of understanding for all constituents, and avoid plan continuation bias.

Conclusion

So to recap the fix, my framework for incentives is as follows:

Objectives: Identify what success looks like. Go deep on the ultimate objectives of the program.

Metrics: Establish metrics to track success. Never settle for what is easy to measure over what is meaningful.

Anti-Metrics: Establish anti-metrics to determine if solving one problem is creating another.

Stakes & Effects: Always consider the stakes (high or low) and adjust the rigor of your second-order effects analysis accordingly.

Skin in the Game: Avoid principal-agent problems by ensuring the incentive designer has skin in the game (i.e. participates in both the pleasure and the pain).

Clarity & Fluidity: Incentives are only as good as the clarity of their dissemination and the ability to adjust based on new information.

I hope you find this framework as productive and helpful as I have. I’d love to see some comments about your experience with it (and any tweaks you would suggest).

Sahil’s Job Board - Featured Opportunities

Hyper - Chief of Staff (NEW DROP!)

Olukai - VP of E-Commerce (NEW DROP!)

Consensus - Lead Software Engineer (NEW DROP!)

Scaled - Director of Operations (NEW DROP!)

Fairchain - Software Engineer, Full Stack (NEW DROP!)

Incandescent - Operations Associate

Practice - Chief of Staff

Maven - GM of Partnerships & Ops

Hatch - Senior PM, Senior Product Marketing Manager

AbstractOps - Head of Engineering

On Deck - Forum Director, CFO Forum, VP Finance

Skio - Founding Engineer

Metafy - Senior Frontend Developer

Commonstock - Community Manager, Social Media Manager, Marketing Designer, Backend Engineer

SuperFarm - VP/Director Account Ops, Scrum Master

Launch House - Community Manager, Community Lead

Free Agency - Chief of Staff

The full board can be found here!

We just placed a Growth Lead, Swiss Army Knife, Account Exec, and several other roles in the last month. Results for featured roles have been awesome! If you are a high-growth company in finance or tech, you can use the “Post a Job” button to get your roles up on the board and featured in future Twitter and newsletter distributions.

That does it for today’s newsletter. Join the 32,000+ others who are receiving high-signal, curiosity-inducing content every single week! Until next time, stay curious, friends!

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit sahilbloom.substack.com

Today’s newsletter is brought to you by Tegus!

Tegus has been a complete game changer for my research and learning process. Tegus is the leading platform for primary research—it offers a searchable database of thousands of instantly-available, investor-led interviews with experts on a wide range of industries, companies, and topics. It’s fast and cost-effective, enabling you to do great primary research without breaking the bank.

Special Offer: Tegus is offering a free 2-week trial to all Curiosity Chronicle subscribers—sign up below to level up your research game today!

Today at a Glance:

Incentives are everything—an uber-powerful force governing our interactions, organizations, and society.

Unfortunately, humans are astonishingly bad at establishing incentives—we consistently create systems that invite manipulation and unintended consequences.

The framework for better incentives involves six key pillars: Objectives, Metrics, Anti-Metrics, Stakes & Effects, Skin in the Game, and Clarity & Fluidity.

Incentives: The Failure & The Fix

“Show me the incentive and I will show you the outcome.” — Charlie Munger

Incentives are everything—an uber-powerful force governing our interactions, organizations, and society.

Well-designed incentives have the power to create great outcomes; poorly-designed incentives have the power to…well…create terrible outcomes.

Unfortunately, humans are astonishingly bad at establishing incentives—we consistently create systems that invite manipulation and unintended consequences. More often than not, we wind up in the poorly-designed camp scrambling for answers and quick fixes.

Let’s change that…

In today’s piece, I will share a framework for establishing incentives (that actually create desired outcomes).

Incentives: The Failure

Let’s start with a basic definition of incentives:

Incentives are anything that motivates, inspires, or drives an individual to act in a specific manner. They come in two forms: intrinsic and extrinsic.

Intrinsic incentives are internal—created by self-interest or desire. Extrinsic incentives are external—created by outside factors, typically a reward (positive incentive) or punishment (negative incentive).

For today, we'll be focusing on extrinsic incentives…

In a (very) simple model, extrinsic incentives involve two key components:

Measure: The metric that the individual or group will be judged upon. The measure can be quantitative (KPIs, metrics, etc.) or qualitative.

Target: The level of the measure at which a reward or punishment will be initiated. The target can be specific (you receive your incentive if the KPI hits X level) or general (you receive your incentive if your manager is satisfied with your work).

But there is a real problem here. This simple model of incentives—which will feel familiar if you have ever worked in the government, a large organization, or anywhere really—often leads to undesirable outcomes and unintended consequences.

Goodhart’s Law

Goodhart’s Law is quite simple: When a measure becomes a target, it ceases to be a good measure. If a measure of performance becomes a stated goal, humans tend to optimize for it, regardless of any associated consequences. The measure loses its value as a measure!

Goodhart’s Law is named after British economist Charles Goodhart, who referenced the concept in a 1975 article on British monetary policy.

“Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.” — Charles Goodhart

But the concept was popularized by anthropologist Marilyn Strathern. In a 1997 paper, she generalized the thinking and called it Goodhart’s Law.

“When a measure becomes a target, it ceases to be a good measure.” — Marilyn Strathern

It became a mental model with considerable practical relevance—a phenomenon that has been observed time and again throughout history.

Let's look at a few examples and use them to build a mental model for where incentives go awry.

The Cobra Effect

There were too many cobras in India. The British colonists—worried about the impact of these deadly creatures—started offering bounties for cobra heads.

Locals excitedly began breeding cobras, chopping off their heads, and turning them in to earn the bounties. When the breeding got out of hand, some of the breeders were forced to release the cobras onto the streets, thereby increasing the population of cobras.

Clearly not what the British had in mind…

The British viewed cobra heads as a simple way to measure cobra elimination, so they gave the population an incentive to deliver cobra heads. The result? Locals gamed the system, breeding cobras to earn the bounties.

An incentive designed to reduce the cobra population actually increased it!

Soviet Nails

In order to meet their ambitious goals, the Soviets needed to produce more nails to fuel their industrial complex.

First, Soviet factories established incentives based on the number of nails produced. What happened? The workers produced thousands of tiny nails.

Nevertheless, they persisted, adjusting the incentives to be based on the weight of nails produced. That should fix the tiny nails problem! What happened? The workers produced a few massive nails.

In both cases, the nails were useless.

The Soviet factory managers had viewed nail quantity and nail weight as easy ways to measure production, so they gave their workers incentives based on these measures. The result? A bunch of useless nails.
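The nail story can be reproduced with a toy model. The sketch below is purely illustrative: the three nail designs, the time it takes to make each one, and the assumption that only the standard nail is usable are all invented for the example, not historical figures. It simply asks which design a worker would pick if rewarded on a single measure.

```python
SHIFT_MS = 8 * 60 * 60 * 1000  # one eight-hour shift, in milliseconds

# Hypothetical nail designs: name -> weight in grams.
DESIGNS = {"tiny": 1, "standard": 10, "massive": 5000}
USEFUL = {"standard"}  # assumption: only this design is usable by builders


def shift_output(design: str) -> dict:
    """Output of one shift spent making a single nail design."""
    grams = DESIGNS[design]
    ms_per_nail = 1000 + 10 * grams  # assumed: fixed setup time plus time per gram
    count = SHIFT_MS // ms_per_nail
    return {
        "design": design,
        "count": count,
        "weight_g": count * grams,
        "useful_nails": count if design in USEFUL else 0,
    }


def best_design(metric: str) -> str:
    """The design a worker would pick if rewarded purely on `metric`."""
    return max(DESIGNS, key=lambda d: shift_output(d)[metric])


for metric in ("count", "weight_g", "useful_nails"):
    print(f"rewarded on {metric}: workers make {best_design(metric)} nails")
```

Under these assumptions, rewarding count favors the tiny nail and rewarding weight favors the massive one. Only a measure tied to what builders can actually use points workers at the standard nail.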

Amazon's "Hire-to-Fire" Issue

Amazon believed employee turnover was healthy. From its early days, the company had built a culture in which the bottom 10% should be scrubbed annually to keep upgrading the organization’s talent level.

To incentivize healthy employee turnover rates, it gave its managers a target rate for annual turnover.

The result? Media articles about a “hire-to-fire” practice emerged. Managers had allegedly hired employees they planned to fire in order to meet their turnover targets.

Clearly not what Jeff Bezos had in mind...

Wells Fargo Account Openings

Wells Fargo is a new inductee of the Unintended Consequences Hall of Fame. An instant classic.

Senior leadership of the bank viewed new account openings as an easy way to track business growth, so it gave its junior employees account-opening targets. Employees would be pushed to hit these targets or risk punishment.

The result? Employees opened millions of fake accounts to hit their targets and Wells Fargo was fined billions for the fraud.

A Mental Model for Broken Incentives

With these examples as a backdrop, we can begin to formulate a simple, rough mental model for where incentives seem to go awry.

Poorly-designed incentives typically exhibit one or more of the following three characteristics:

McNamara Fallacy

Narrow Focus

Vanity > Quality

The McNamara Fallacy

The McNamara Fallacy is named after Robert McNamara—the US Secretary of Defense from 1961-1968—whose over-reliance on quantitative metrics led the US astray during the Vietnam War.

The McNamara Fallacy is the flawed assumption that what can't be measured isn't important. It is the tendency to make a decision based on observable, quantitative metrics while ignoring all others.

It leads to a focus on measuring what is easy to measure vs. what is actually important. Cobra heads, nail quantity or weight, employee turnover, and new account openings were all easy to measure quantitatively, but totally missed the bigger picture. All four programs were victims of the McNamara Fallacy.

(Note: I plan to do a full thread and newsletter piece on the McNamara Fallacy in the future, as it is a topic and history worthy of deeper discussion.)

Narrow Focus

The narrow focus issue is an objective scoping issue. If you think too narrowly about the desired outcomes of the program, you are more likely to create incentives that miss the forest for the trees.

Using the Wells Fargo example, the desired outcome was not to have more accounts opened at the bank—more appropriate would have been to define the desired outcome as growth in the number of happy, well-serviced customers.

As a rule: When in doubt, zoom out.

Vanity > Quality

Vanity metrics—we’ve all seen them (and probably cared too much about them).

The reliance on vanity metrics—like cobra heads or new account openings—that will impress superiors or the public is a recipe for disaster in incentive design.

Imagine incentivizing a brand social media manager on the number of followers of the account. That person is likely to start buying followers in order to hit these targets. The vanity metric is rarely the quality metric.

So with these in mind, let's craft a better framework for incentives.

Incentives: The Fix

The incentive framework involves six key pillars:

Objectives

Metrics

Anti-Metrics

Stakes & Effects

Skin in the Game

Clarity & Fluidity

It is intended to provide a structure through which to create, evaluate, and adjust incentives. Let's walk through each of the pillars…

Objectives

Deep consideration of the ultimate objectives of the incentives is critical.

What does success look like? This isn’t about the surface level objectives—you need to go deeper.

Without upfront deep thought on objectives, intelligent incentive design is impossible. Start here before moving on.

Metrics

Establish metrics that you will measure to track success.

Importantly, be sure to avoid the McNamara Fallacy: never choose metrics on the basis of what is easily measurable over what is meaningful. Just because a specific KPI is easy to track doesn’t mean it is the right KPI to use as a measure.

If you could track and measure one metric that would tell you everything you want to know about your business or organization, what would it be?

Identify a wish list of metrics with no regard for feasibility. Work backwards from there into what is possible.

Anti-Metrics

Even more important than the core metrics, establish "anti-metrics" that you measure to track unintended consequences. I was first tipped off to this idea by Julie Zhuo (she calls them counter-metrics), who has done some exceptional thinking and writing on the topics of organizations and growth.

Anti-metrics force you to consider whether your incentives are fixing one problem but creating another.

In the Amazon example, an effective anti-metric may have been average tenure of newly-hired employees by cohort. If you saw this figure dipping dramatically from the start of the employee turnover incentive program, you would know something was wrong.
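As a rough sketch of how such an anti-metric might be monitored, the Python below computes average first-year tenure by hire cohort and flags cohorts hired under the program whose tenure has fallen sharply against the pre-program baseline. Everything here is an assumption made for illustration: the `HIRES` data, the `PROGRAM_START` year, and the 25% `ALERT_DROP` threshold are hypothetical and do not come from Amazon.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical data: (hire cohort, months of tenure in the first year, capped at 12).
HIRES = [
    ("2019", 12), ("2019", 12), ("2019", 9), ("2019", 12),
    ("2020", 12), ("2020", 11), ("2020", 12), ("2020", 10),
    ("2021", 4), ("2021", 12), ("2021", 3), ("2021", 5),
]
PROGRAM_START = "2021"  # assumed year the turnover target took effect
ALERT_DROP = 0.25       # assumed threshold: flag a 25% fall versus baseline


def tenure_by_cohort(hires):
    """Average first-year tenure (months) for each hire cohort."""
    cohorts = defaultdict(list)
    for cohort, months in hires:
        cohorts[cohort].append(months)
    return {cohort: mean(months) for cohort, months in sorted(cohorts.items())}


def flagged_cohorts(hires, program_start=PROGRAM_START, alert_drop=ALERT_DROP):
    """Cohorts hired under the program whose tenure fell sharply versus baseline."""
    averages = tenure_by_cohort(hires)
    baseline = mean(v for c, v in averages.items() if c < program_start)
    return [
        c for c, v in averages.items()
        if c >= program_start and v < baseline * (1 - alert_drop)
    ]


print(tenure_by_cohort(HIRES))
print(flagged_cohorts(HIRES))
```

The point of the design is that the anti-metric is tracked alongside the core metric from day one, with a baseline taken before the incentive starts, so a dip shows up as a flag rather than as a news story.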

Stakes & Effects

As with all decisions, it is critical to consider and understand the stakes:

High-Stakes = Costly Failure, Difficult to Reverse

Low-Stakes = Cheap Failure, Easy to Reverse

If you are dealing with a high-stakes program, you have to conduct a rigorous second-order effects analysis (pro tip: read my thread on this).

Iterate on your metrics and anti-metrics accordingly.

Skin in the Game

To avoid principal-agent problems, the incentive designer should have skin in the game.

Never allow an incentive to be implemented where the creator participates in the pleasure of the upside, but not the pain of the downside.

Skin in the game improves outcomes.

Clarity & Fluidity

An incentive is only as effective as:

The clarity of its dissemination.

The ability and willingness to adjust it based on new information.

Takeaway: Create a level playing field of understanding for all constituents and avoid plan continuation bias.

Conclusion

So to recap the fix, my framework for incentives is as follows:

Objectives: Identify what success looks like. Go deep on the ultimate objectives of the program.

Metrics: Establish metrics to track success. Never settle for what is easy to measure over what is meaningful.

Anti-Metrics: Establish anti-metrics to determine if solving one problem is creating another.

Stakes & Effects: Always consider the stakes (high or low) and adjust the rigor of your second-order effects analysis accordingly.

Skin in the Game: Avoid principal-agent problems by ensuring the incentive designer has skin in the game (i.e. participates in both the pleasure and the pain).

Clarity & Fluidity: Incentives are only as good as the clarity of their dissemination and the ability to adjust based on new information.

I hope you find this framework as productive and helpful as I have. I’d love to see some comments about your experience with it (and any tweaks you would suggest).

Sahil’s Job Board - Featured Opportunities

Hyper - Chief of Staff (NEW DROP!)

Olukai - VP of E-Commerce (NEW DROP!)

Consensus - Lead Software Engineer (NEW DROP!)

Scaled - Director of Operations (NEW DROP!)

Fairchain - Software Engineer, Full Stack (NEW DROP!)

Incandescent - Operations Associate

Practice - Chief of Staff

Maven - GM of Partnerships & Ops

Hatch - Senior PM, Senior Product Marketing Manager

AbstractOps - Head of Engineering

On Deck - Forum Director, CFO Forum, VP Finance

Skio - Founding Engineer

Metafy - Senior Frontend Developer

Commonstock - Community Manager, Social Media Manager, Marketing Designer, Backend Engineer

SuperFarm - VP/Director Account Ops, Scrum Master

Launch House - Community Manager, Community Lead

Free Agency - Chief of Staff

The full board can be found here!

We just placed a Growth Lead, Swiss Army Knife, Account Exec, and several other roles in the last month. Results for featured roles have been awesome! If you are a high-growth company in finance or tech, you can use the “Post a Job” button to get your roles up on the board and featured in future Twitter and newsletter distributions.

That does it for today’s newsletter. Join the 32,000+ others who are receiving high-signal, curiosity-inducing content every single week! Until next time, stay curious, friends!

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit sahilbloom.substack.com